AI and the Importance of Ethics in the Workplace

Ashley McDonald

Upon successful completion of this chapter, learners will be able to do the following:

- Recognize what generative AI is and how it is used in legal practice.

- Identify common risks associated with using generative AI.

- Review steps to assess the accuracy and validity of AI-generated legal content.

- Outline the key ethical responsibilities of legal professionals when using AI.

Introduction

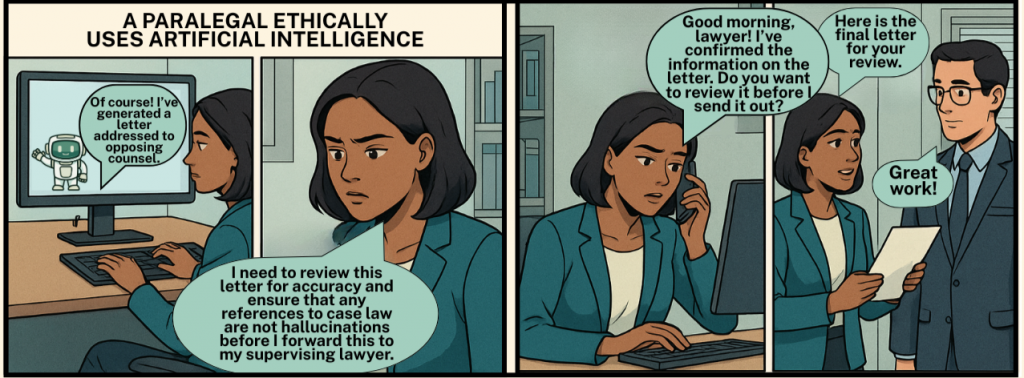

This chapter focuses on the uses of AI in the workplace and the importance of ethics when using AI tools in the legal field. Because AI is a new and evolving technology, it is important to know what generative AI is, how it can be used in a law office, and what the law society expects when AI is used for legal tasks. It is also important to understand the ethics involved in using generative AI and the consequences of misusing it.

What is Generative AI?

Generative artificial intelligence, or generative AI, is defined by the Law Society of Alberta (2024) as a “type of artificial intelligence that can create new content, such as text, images, or audio, based on some input or prompt” (p. 1). You simply type in precisely what you would like the AI tool to do, and it produces that content, often within minutes of receiving your instructions. One way to think about how generative AI works is in three stages: input, analysis, and output (Queen’s University, 2025).

This technology can drastically improve efficiency: a task that might otherwise take hours or even days, depending on its complexity, can often be completed in minutes. AI can be used for many things in the law office, including drafting documents or correspondence and researching legal issues. The Law Society of Alberta (2024) suggests that generative AI can also be used to proofread documents, summarize complex documents, and generate ideas for writing a document.

Although AI can improve efficiency, it also has drawbacks and risks that must be considered. The University of Alberta (2025) lists several issues to consider when using generative AI, including the following:

- Hallucinations: This term describes AI producing information that is inaccurate or does not exist. For example, an AI tool may create false cases that appear to relate to the information it was given. It is important to fact-check any cases the AI produces to ensure that they are real and not AI hallucinations.

- Errors: When using AI, it is important to check for errors, as it can produce inaccurate or biased information depending on the data it draws on and the information it is given.

- Tasks: Although generative AI is often seen as a tool that can do virtually anything, it has limits. Some tasks may be beyond what the AI can complete, depending on how advanced the program you are using is.

It is important to keep these points in mind as you explore the uses of AI and how it can benefit you and the work you conduct. Generative AI can simplify tasks and increase efficiency. However, it is also important to double-check the information it produces, as there are risks and limitations to what it can do.

How To Use Generative AI

Using Prompts with Generative AI

AI is a new technology that continues to change as it advances. Figuring out how to use AI effectively in a law office may seem daunting; however, the University of Alberta (2025) has created a quick guide to help you write prompts that generate the information you need.

The University of Alberta (2025) recommends using the 5P method. The 5Ps each represent a category that can be used when writing a prompt for the generative AI to produce information. The 5Ps are as follows:

Prime

The first “P” in the method stands for “Prime.” Priming means giving the generative AI tool specific context for a task, such as the facts, the jurisdiction, and a time frame. This is especially useful for finding cases or legislation related to your fact scenario. See the following examples:

- The client was involved in an armed robbery of a bank in Edmonton…

- The Residential Tenancies Act in Alberta and how it relates to tenants wanting to end their lease early due to…

Prompt

The second “P” stands for “Prompt” and is used to give the AI tool specific instructions on a task. This is useful when you want the AI tool to do any of the following:

- Compare and contrast different legislation.

- Summarize case decisions.

- Draft legal documents, such as a memorandum.

Persona

The third “P” stands for “Persona” and should be used when you want the AI tool to adopt a particular role, perspective, or tone when responding to your prompt. Examples of this include:

- Write from the perspective of opposing counsel in a matter involving…

- Generate an email to opposing counsel in a formal tone requesting…

Product

The fourth “P” stands for “Product” and should be used when you want the AI to produce a specific type or format of output. See the following examples:

- A letter to a client explaining…

- A 1,000-word summary…

Polish

The last “P” stands for “Polish” and is used to refine the results the AI tool has given you. Polishing is useful with almost any prompt, as it narrows the results toward the end product you want. Examples of this include:

- Contrast this information with…

- This only includes information relevant in Ontario’s jurisdiction. Is there relevant information in Alberta’s jurisdiction?…

Using the 5P method suggested by the University of Alberta can make your prompts much more effective, whatever type of information you need to obtain. For more information on using AI in legal research, consult the University of Alberta’s Generative AI for Legal Research guide.

Assessing Generative AI Information

After obtaining information from an AI tool, you must assess it properly to validate its accuracy. As discussed previously, AI has limitations and can produce hallucinations, errors, and biased information. So, although AI can be a helpful tool, it is important to know how to assess the accuracy and validity of the information it gives you. Queen’s University (2025) suggests five steps for evaluating AI-generated content. The steps are as follows:

Assess System Limitations

This step analyzes how the AI system works, which in turn helps you understand how it generates the information it gives you. One way to approach this is the three-layer model (Queen’s University, 2025), which consists of the input, analysis, and output layers. The input layer consists of the data on which the system operates. Consider how current that data is and whether it contains any human bias. The second layer is the analysis layer, where the system interprets the data. Again, consider whether there is any bias in the data and whether the system tells you how it analyzes the data. The last layer is the output layer, which is the result the system produces from the input. Consider again whether the output shows any bias, whether anything is missing from the results, and whether you can train the AI to help it learn how to perform tasks.

Overall, assessing the AI system itself helps you judge whether it is likely to give you accurate, current, and unbiased information. The three-layer model suggested by Queen’s University is an excellent way to assess the system you are using.

Verify Information

As mentioned previously, AI tools can generate hallucinations, which are inaccurate information or cases that do not exist (Queen’s University, 2025). It is important to double-check the sources and information the AI tool gives you to verify that the cases and information exist. One way to do this is to find the information in a legal database such as CanLII or Westlaw, review the source to ensure the reference is accurate, and then note the source to refer to in your reference guide.

Compare with Other Sources

Although generative AI is helpful, it is important to conduct your own separate research rather than relying solely on AI-generated content. Sources behind a paywall, such as those found in subscription legal databases, generally will not be found by AI platforms that lack access to those databases (CanLII, by contrast, is free to use). With this step, be sure to search legal databases such as CanLII and Westlaw for additional information that can support or verify your legal research.

Update for Currency

Do not assume that the information given to you by the generative AI is current (Queen’s University, 2025). Not all systems rely on the most current information, so it is important to do additional research to confirm that the information is current and to check whether a new law or other information should be referenced instead. Some AI platforms show how current their information is, but not all do, so additional research may be necessary.

Take Steps to Address Bias

The last step that Queen’s University (2025) suggests is to check whether any bias is evident in the information you have been given. Check for bias in your initial prompt as well as in the output, since both can affect the results.

These steps suggested by Queen’s University can help you use AI tools responsibly. AI can help streamline legal research, but you must ensure that the information it gives you is valid, accurate, and free from error and bias, and following the above steps will aid in that process. AI is an effective tool, but it should not be your only source of information when conducting research. It is still important to fact-check the information you are given and to do your own additional research.

AI Platforms

Many different generative AI platforms are available, and each has advantages and disadvantages depending on the task you need completed. Before using a platform, ensure that it is reliable and can accurately complete the task you need. Note also that some platforms require a subscription. If your law firm subscribes to a generative AI platform, it is worth taking advantage of that tool; if it does not, numerous platforms are free for public use. Below is a list of different platforms that can be used.

| Free Platforms | Subscription-Required Platforms |
|---|---|
| ChatGPT | Lexis+ AI (LexisNexis legal research platform with AI assistant) |
| Scribe (free version; paid versions offer more features) | Alexi |
| Microsoft Copilot (free version; paid versions available with a Microsoft 365 subscription) | CoCounsel |
| Canadian Legal Information Institute (CanLII) | Westlaw Edge (AI-enhanced) |

Inequality in Access to AI Platforms

Although there are many AI platforms to choose from, there is considerable discussion about unequal access to them. Legal-specific AI services come at a cost, and smaller firms often cannot afford these extra expenses (James, 2025). Whichever platform a firm uses, whether free or subscription-based, the firm should research and test it to ensure that it produces accurate information. Free platforms such as ChatGPT and Grok may not respond to your query as accurately or comprehensively as some of the platforms that require a fee (James, 2025). This inevitably creates a divide not only between “Big Law” firms and smaller boutique operations but also for self-represented individuals who cannot afford a lawyer and are seeking alternative means of legal advice. Although outside the scope of this chapter, Rachel Paterson (2024) wrote a compelling article on how the rise of AI tools in the legal profession is widening the digital divide for self-represented litigants. Paterson (2024) states that the rise in AI “is causing the digital divide to widen at an unprecedented rate, which has concerning implications for people who self-represent, and access to justice as a whole.”

AI and the Importance of Ethics

Ethics in law refers to a “code of conduct and professional responsibilities that govern lawyers’ behaviour” (Pathfinder Editorial, 2024). Legal ethics is one of the most crucial aspects of the Canadian justice system and a guiding force that all legal professionals should follow. Legal ethics ensures that lawyers prioritize their clients’ interests, uphold justice fairly and impartially, build trust by protecting client confidentiality, act with diligence and competence, and promote public trust in the Canadian justice system (Pathfinder Editorial, 2024). All lawyers practising in Alberta must adhere to the Law Society of Alberta’s Code of Conduct, which reflects these principles and sets the ethical standards that legal professionals must meet.

Knowing the importance of ethics in the legal field and the standards that legal professionals hold helps us understand how ethics relates to AI use in the legal field. There have been cases where the improper use of AI has led to errors during the court process that could have been avoided if the AI tool had been used correctly.

In one Ontario case, a judge found that a submission filed with the court appeared to have been written with AI (Draaisma, 2025). The lawyer had relied on AI-produced court materials that cited fictitious cases, as well as cases that were irrelevant to the criminal law issue in question (Draaisma, 2025). The judge ordered the lawyer to prepare new submissions and resubmit them to the court, and instructed the lawyer to pinpoint the passages being relied on in the cited cases, check the citations, and ensure that each citation links to CanLII (Draaisma, 2025).

Another case, Ko v Li, 2025 ONSC 2766, also dealt with the repercussions of improperly using AI. The lawyer in this case submitted a factum containing hyperlinks to CanLII that were inaccurate: they did not lead to relevant cases and displayed error messages. In addition, when asked to provide opposing counsel with citations and copies of the cases referenced in the factum, the lawyer could not provide them.

In a third case, Halton (Regional Municipality) v Rewa et al., 2025 ONSC 4503, one of the parties submitted a factum that relied on fictitious cases. It was suggested that the party had used AI to generate the factum and that the AI had produced hallucinated cases; the party admitted to using AI because they were unable to hire counsel to assist in the matter. The judge stated that all parties, whether self-represented or not, must verify the cases they rely on before submitting them to the court. The hearing was adjourned with costs against the party, giving them additional time to amend their submissions.

In the first two cases noted above, the lawyers were not seriously punished for failing to fact-check their research, but the self-represented party in the third case was ordered to pay costs. This is a surprising outcome, given that, to date, many lawyers who have misused AI have not faced the same consequence. The Code of Conduct guides lawyers’ behaviour to ensure that justice is upheld and that they prioritize their clients’ interests (Pathfinder Editorial, 2024). The fear is that if a lawyer does not check AI-generated work and it contains serious errors or even hallucinated cases, this incorrect information could cause a miscarriage of justice (Draaisma, 2025). This could be detrimental to a client who trusted their lawyer to represent them effectively, possibly after paying thousands of dollars for that representation. A lawyer who fails to uphold their duty to their client and presents inaccurate information to a judge risks not only a miscarriage of justice but also serious harm to the client’s life. As stated in Ko v Li, lawyers must prepare and review material competently, must not fabricate or miscite cases, must read cases before submitting them to the court, must review information produced by artificial intelligence, and must not mislead the court. Given that lawyers are held to these standards, it is surprising that neither of these lawyers was sanctioned for their negligence while the self-represented party was.

These cases raise questions about how such situations should be handled, not only in terms of ethics but also in terms of competence, supervision of staff, and the proper administration of justice. Furthermore, should judges be responsible for fact-checking and verifying all written materials submitted by lawyers, including checking for AI-generated content? Would this be a reasonable use of public resources and an effective way to manage workload in the legal system? How AI use should be managed, and what consequences should follow from unethical use, is still being worked out by the courts. It will be interesting to see how this evolves over time, especially as AI tools become more advanced and capable of completing complex tasks with increasing speed.

AI Policies in the Workplace

As AI becomes more prevalent in the workplace, it must be used responsibly in professional legal settings. One way to ensure that all employees use AI responsibly is for the law firm to develop an AI policy so that everyone knows how to use AI appropriately (Law Society of Alberta, 2024). If your firm does not already have a policy in place, the Law Society of Alberta has a reference guide on what such a policy should include. The Law Society of Alberta’s (2024) guide suggests including:

- Examples of Permitted Use – Explain what an employee can use generative AI for and give examples of what this may look like.

- Examples of Prohibited Use – Explain what an employee should not use the generative AI tool for.

- Liability – What happens if an employee inappropriately uses the AI?

- Disclosure – Be open with clients when generative AI is used in their case.

- Monitoring – Have a clause stating that the firm can monitor how AI is used.

- Confidentiality and Privacy – Have a clause that states the importance of confidentiality and privacy of client information. Ensure that sensitive information is protected from breaches.

These are just a few examples of clauses that can be included in a law firm’s AI policy. Each firm will have its own policies that its employees must follow. What should remain the same, however, is that AI is used responsibly, that clients’ interests remain at the forefront, and that clients can rely on the services they are paying for. The Law Society of Alberta’s guide offers several further suggestions for implementing an AI policy in a law firm.

Summary

AI is a rapidly evolving technology that is increasingly used in the workplace. When used effectively, it can drastically improve work output, but it must be used appropriately to ensure that the information it produces is accurate. Clients’ interests should remain the number one priority; they are entrusting the law firm to help them with their legal matters during a time of need, so they should receive the quality service they are paying for. Generative AI can be a great tool for improving the work done on a client’s case, but it must be used appropriately to protect the client’s interests.

Reflection Questions

- Can you think of a situation in which relying too heavily on generative AI might create risk for a legal professional or their client? What would be the consequences?

- After reading about the cases where lawyers submitted hallucinated case law, how do you think legal regulators should respond to such incidents?

- How can a law firm balance the efficiency offered by AI with its obligation to act ethically and competently for clients?

- As a paralegal or legal assistant, how might your use of generative AI tools, both responsibly and irresponsibly, impact the lawyer you work with? Consider how your actions could influence the lawyer’s reputation, ethical obligations, or the outcome of a client’s matter.

Short-Answer Questions

- What is one major benefit of using generative AI in a law office?

- What are two common risks of relying on generative AI for legal research?

- What does the “Prime” step in the 5P method involve?

- Why is it important to verify AI-generated information using legal databases like CanLII or Westlaw?

Model Answers

- What is one major benefit of using generative AI in a law office?

- It can improve efficiency by completing tasks such as drafting documents or summarizing legal information much faster than manual methods.

- What are two common risks of relying on generative AI for legal research?

- Hallucinations (false information) and errors or biases in the output.

- What does the “Prime” step in the 5P method involve?

- Providing specific facts, jurisdiction, and time frames to help the AI tool generate relevant results.

- Why is it important to verify AI-generated information using legal databases like CanLII or Westlaw?

- Because AI may fabricate or misquote cases, and legal databases provide accurate, verifiable sources.

References

Canadian Professional Path. (2024, February 14). Legal ethics: The pillars of Canadian law. https://canadianprofessionpath.com/legal-ethics/

Draaisma, M. (2025, June 3). An Ontario judge tossed a court filing seemingly written with A.I. Experts say it’s a growing problem. CBC. https://www.cbc.ca/news/canada/toronto/artificial-intelligence-legal-research-problems-1.7550358

Halton (Regional Municipality) v Rewa et al., 2025 ONSC 4503. https://canlii.ca/t/kdn3w

James, H. (2025, May 2). Access and equity in legal AI: What about the rest? 9twelve Legal Research + Consulting. https://www.9twelve.ca/blog/blog-post-title-two-3k9bx

Ko v Li, 2025 ONSC 2766. https://canlii.ca/t/kbzwn

Law Society of Alberta. (2024, August 30). How to use generative AI in your legal practice: A guide for lawyers and staff. Retrieved June 26, 2025, from https://documents.lawsociety.ab.ca/wp-content/uploads/2024/09/23082737/How-to-Use-Gen-AI-in-Your-Legal-Practice.pdf

Paterson, R. (2024, June 5). A digital wolf in sheep’s clothing: How artificial intelligence is set to worsen the access to justice crisis. National Self-Represented Litigants Project. https://representingyourselfcanada.com/a-digital-wolf-in-sheeps-clothing-how-artificial-intelligence-is-set-to-worsen-the-access-to-justice-crisis/

Queen’s University. (2025, July 11). Critically assessing AI-generated content. https://guides.library.queensu.ca/legal-research-manual/critically-assessing-generative-artificial-intelligence

Queen’s University. (2025, July 11). How does GenAI work? https://guides.library.queensu.ca/legal-research-manual/introduction-generative-artificial-intelligence

University of Alberta. (2025, February 11). Generative AI for legal research. https://guides.library.ualberta.ca/generative_ai_legal_research

Glossary

Generative AI: A type of artificial intelligence that creates new content such as text, images, or audio in response to a prompt.

Hallucination: The phenomenon where AI generates fabricated or inaccurate information, such as fictitious case law.

5P method: A structured approach to prompt engineering, recommended by the University of Alberta, consisting of Prime, Prompt, Persona, Product, and Polish.

Bias: Systematic error or skewed results generated by AI due to the nature of the data it was trained on.

Professional responsibility: A legal professional’s duty to act competently, ethically, and in the client’s best interest.

AI policy: A workplace policy that outlines permitted and prohibited uses of AI, along with clauses on privacy, liability, and disclosure.